What Is PyTorch Parallel Training?

PyTorch is a popular machine learning framework written in Python. In PyTorch, parallel training allows you to leverage multiple GPUs or computing nodes to speed up the process of training neural networks and other complex machine learning algorithms. This technique is particularly beneficial when dealing with large-scale models and massive datasets that would otherwise take a very long time to process.

PyTorch offers several mechanisms for parallel training:

- DataParallel: Enables single-machine multi-GPU training with just one line of code.

- DistributedDataParallel: Enables single-machine multi-GPU training which is faster and more efficient, but requires some setup. It is also possible to use this method to run training across multiple machines, using a launching script provided by PyTorch.

- RPC-Based Distributed Training (RPC): Suitable for more advanced training structures that require several PyTorch servers, such as distributed pipeline parallelism and parameter server paradigm.

This is part of a series of articles about distributed computing.

In this article:

Understanding PyTorch Distributed Data-Parallel (DDP)

PyTorch Distributed Data-Parallel (DDP) is an advanced parallel training technique that synchronizes model training across multiple GPUs, whether they are on a single machine or spread across several machines.

Key Features of DDP

- Scalability: DDP allows seamless scaling from one GPU to multiple GPUs, and from one machine to multiple machines. This scalability is critical for training large-scale models and handling extensive datasets efficiently.

- Synchronous Training: DDP employs synchronous training, meaning gradients are averaged across all GPUs before updating the model parameters. This ensures consistent model updates and helps maintain model accuracy across different GPUs.

- Fault Tolerance: DDP is designed to be robust, with built-in mechanisms to handle hardware failures. If a GPU or machine goes down, DDP can recover and continue training with minimal disruption.

- Performance Optimization: DDP optimizes performance by overlapping computation and communication. While one GPU is performing computations, another can be communicating with other GPUs, reducing idle times and improving overall training efficiency.

How PyTorch DDP Works

The process begins with initialization, where each process initializes its own model replica and data loader. This is typically done using PyTorch's torch.distributed.launch utility, which sets up the necessary communication environment. This setup ensures that all processes can communicate effectively, a critical step for coordinating the training process across multiple GPUs.

Once initialized, the dataset is partitioned across all GPUs. Each GPU processes a subset of the data, reducing memory requirements and speeding up data loading. This partitioning allows for parallel data processing, significantly enhancing the efficiency of the training process.

During the forward pass, each GPU independently performs computations on its subset of the data. This parallel computation ensures that the workload is evenly distributed, preventing any single GPU from becoming a bottleneck. By executing these operations simultaneously across multiple GPUs, the overall training time is reduced.

In the backward pass, gradients computed by each GPU are averaged across all GPUs. This synchronization ensures that all model replicas receive the same gradient updates, maintaining consistency in the model parameters across different GPUs. The gradient averaging is a critical step that allows the distributed model to converge correctly.

Finally, each GPU independently updates its model parameters using the synchronized gradients. This parameter update step ensures that the models on all GPUs remain consistent, despite being updated separately. By leveraging these mechanisms, DDP optimizes the training process, reducing idle times and improving overall efficiency.

Comparison Between PyTorch DataParallel and DistributedDataParallel

Performance

DataParallel works by splitting the input data across multiple GPUs but performs the gradient computation on a single GPU. This centralized gradient computation can create a bottleneck, limiting the overall performance. While DP can effectively utilize multiple GPUs for forward and backward passes, the single GPU handling gradient updates can become overwhelmed, particularly with large models or datasets. This inefficiency becomes more pronounced as the complexity of the model increases, resulting in slower training times compared to more advanced parallel training methods.

DistributedDataParallel distributes both data and model replicas across GPUs. Each GPU independently computes gradients and synchronizes these gradients across all GPUs. This parallel approach ensures that computations are done in parallel and gradients are averaged across all GPUs, significantly reducing the bottleneck. By enabling each GPU to perform gradient updates independently, DDP provides faster training times and better performance. This method is particularly beneficial for training large-scale models on extensive datasets, as it maximizes the utilization of hardware resources.

Scalability

DataParallel has limited scalability because it can only operate within a single machine. As the number of GPUs on a single machine increases, the efficiency gains diminish due to the bottleneck in gradient computation. This method does not scale well beyond a certain number of GPUs and is not designed to handle distributed training across multiple machines. Consequently, it is more suitable for small to medium-scale projects where the hardware infrastructure is limited to a single machine.

DistributedDataParallel is designed with scalability in mind, supporting multi-GPU setups across multiple machines. This method can efficiently handle scaling from a few GPUs to hundreds, making it suitable for high-performance computing environments. DDP allows seamless scaling from one GPU to multiple GPUs and from one machine to multiple machines, providing the flexibility needed for large-scale machine learning projects. This scalability is critical for training extensive models and handling large datasets efficiently, ensuring optimal use of available hardware resources.

Complexity

One of the main advantages of DataParallel is its simplicity. Implementing DP requires minimal code changes, making it accessible for beginners and those seeking a quick setup. It is particularly suitable for quick experiments and small-scale projects where ease of use is a priority. The straightforward implementation involves wrapping the model with torch.nn.DataParallel, specifying the device IDs, and then proceeding with the training as usual. This simplicity allows users to rapidly prototype and test their models without getting bogged down in complex configurations.

DistributedDataParallel requires a more complex setup compared to DataParallel. The implementation involves initializing the communication backend, partitioning the dataset, and ensuring all processes are correctly synchronized. This setup is typically done using PyTorch's torch.distributed.launch utility, which establishes the necessary communication environment. While this complexity adds an additional layer of setup and configuration, it is offset by the significant performance gains.

Fault Tolerance

DataParallel has limited fault tolerance. If a single GPU fails during training, the process is likely to be interrupted or fail entirely, as the gradient computation depends on all GPUs being operational. This lack of fault tolerance makes DP less reliable for long-running training jobs, especially in environments where hardware stability cannot be guaranteed. Users need to manually handle any GPU failures, which can be cumbersome and time-consuming.

DistributedDataParallel is designed with built-in fault tolerance mechanisms that allow the training process to continue even if some GPUs or machines fail. These mechanisms enable DDP to recover from hardware failures with minimal disruption to the training process. This robustness ensures higher reliability for long-running training jobs, making DDP more suitable for deployment in less stable environments.

Use Cases

DataParallel is best suited for beginners and small-scale experiments. It is ideal for scenarios where ease of implementation and quick prototyping are the primary concerns. Researchers and developers who need to rapidly test and iterate on their models can benefit from the simplicity and accessibility of DP. It is also useful for educational purposes, providing a straightforward introduction to parallel training concepts without the need for complex setups.

DistributedDataParallel is essential for training large neural networks on massive datasets. It is ideal for production systems where efficiency, scalability, and fault tolerance are critical. Large-scale machine learning projects, such as those involving deep learning models for image recognition, natural language processing, or recommendation systems, can significantly benefit from the performance and robustness of DDP.

Quick Tutorial: Multi-GPU Training in PyTorch with DDP

In this tutorial, we will guide you through the steps to set up Distributed Data-Parallel training in PyTorch.

Step 1: Set Up the Environment

Ensure you have a multi-GPU setup and PyTorch installed with the necessary libraries. Install PyTorch using the following command:

pip install torch torchvisionNext, download and extract the sample MNIST data to the data folder using this command:

wget www.di.ens.fr/~lelarge/MNIST.tar.gz

tar -zxvf MNIST.tar.gzStep 2: Initialize the DDP Environment

Start by initializing the DDP environment. This includes setting up the process group and specifying the backend for communication. The init_process_group function is used for this purpose:

import torch

import torch.distributed as dist

import torch.multiprocessing as mp

def setup(rank, world_size):

os.environ['MASTER_ADDR'] = 'localhost' # Change this to the master node's IP address if using multiple machines

os.environ['MASTER_PORT'] = '12355' # Pick a free port on the master node

dist.init_process_group("nccl", rank=rank, world_size=world_size)

def cleanup():

dist.destroy_process_group()Step 3: Define the Model and Wrap with DDP

Define your model and wrap it with DistributedDataParallel. This ensures that the model is replicated across all GPUs and gradients are synchronized:

import torch.nn as nn

import torch.optim as optim

from torch.nn.parallel import DistributedDataParallel as DDP

class SimpleModel(nn.Module):

def __init__(self):

super(SimpleModel, self).__init__()

self.flatten = nn.Flatten()

self.fc1 = nn.Linear(28*28, 10)

self.relu = nn.ReLU()

self.fc2 = nn.Linear(10, 10)

self.fc3 = nn.Linear(10, 10)

def forward(self, x):

x = self.flatten(x)

x = self.fc1(x)

x = self.relu(x)

x = self.fc2(x)

x = self.relu(x)

x = self.fc3(x)

return x

def create_model():

return SimpleModel()Step 4: Partition the Dataset

To ensure an even distribution of data across all GPUs, use torch.utils.data.DistributedSampler:

torch.utils.data.DistributedSampler:

from torch.utils.data import DataLoader, DistributedSampler

from torchvision import datasets, transforms

def create_dataloader(rank, world_size, batch_size=32):

transform = transforms.Compose([transforms.ToTensor()])

dataset = datasets.MNIST(root='./data', train=True, download=True, transform=transform)

sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank)

dataloader = DataLoader(dataset, batch_size=batch_size, sampler=sampler)

return dataloaderStep 5: Implement the Training Loop

Finally, implement the training loop. Make sure to call dist.barrier() to synchronize all processes and avoid data races:

def train(rank, world_size, epochs=5):

setup(rank, world_size)

dataloader = create_dataloader(rank, world_size)

model = create_model().to(rank)

ddp_model = DDP(model, device_ids=[rank])

optimizer = optim.SGD(ddp_model.parameters(), lr=0.01)

criterion = nn.CrossEntropyLoss()

for epoch in range(epochs):

ddp_model.train()

for batch_idx, (data, target) in enumerate(dataloader):

data, target = data.to(rank), target.to(rank)

optimizer.zero_grad()

output = ddp_model(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

if rank == 0:

print(f"Epoch {epoch} complete")

cleanup()

def main():

world_size = 4 # Number of GPUs

mp.spawn(train, args=(world_size,), nprocs=world_size, join=True)

if __name__ == "__main__":

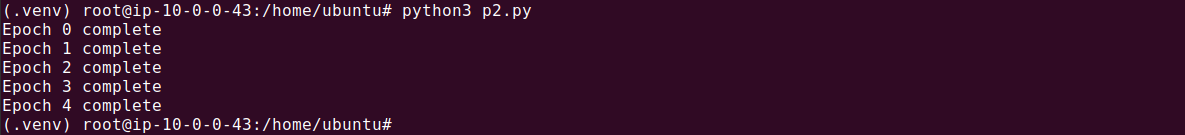

main()The output should look like this:

This code initializes the DDP environment, sets up the model and data loader, and runs the training loop on multiple GPUs. The mp.spawn function is used to start multiple processes, each corresponding to a GPU.

Parallel Computing and Run:ai

Run:ai has built Atlas, an AI computing platform, that functions as a foundational layer within the MLOps and AI Infrastructure stack. The automated resource management capabilities allow organizations to properly align the resources across the different MLOps platforms and tools running on top of Run:ai Atlas. Deep integration with the NVIDIA ecosystem through Run:ai's GPU Abstraction technology maximizes the utilization and value of the NVIDIA GPUs in your environment.